I am a PhD student at the Robotics Institute at Carnegie Mellon University, advised by Professor Chris Atkeson. I'm interested in making useful household robots that can efficiently learn new tasks. I previously interned with the embodied AI group at Meta Reality Labs Research and Fundamental AI Research (FAIR), working with Ruta Desai on grounding large language models for task planning. I received my B.S. in Mathematics-Computer Science from UC San Diego, where I worked with Professor Michael Yip on surgical robotics and motion planning. I've also worked at Brain Corp as a research intern.

My goal is to build efficient robot task learning systems by leveraging large internet datasets and pretrained models. My thesis is split into two parts: improving robot task planning with large pretrained models (Large Language Models and Vision Language Models), and augmenting robot skill learning with human videos and other internet data. I'm working on improving both of these components of task learning and on integrating them into a full task learning system capable of efficiently acquiring and executing new tasks.

Grounding LLMs for Robot Task Planning

Large language models have shown promise in enabling robots to reason and plan in human environments. Unfortunately, current multimodal LLMs are limited in their ability to consider visual representations of task history or state, such as images or video, when constructing plans. I am developing methods to overcome this limitation and enable LLMs to attend to the relevant portions of long visual histories for task planning. Solving this problem is crucial for enabling robots to recover from mistakes and to collaborate effectively with humans.

Learning Robot Skills from Internet Data

Real robot data is one of the hardest forms of data to collect. Robots that can accelerate their learning with human videos and other internet data can learn tasks more efficiently. In this work, I'm studying which forms of internet data are best to learn from, how to use them effectively, and how to compensate for the lack of physical information in internet data.

Mrinal Verghese and Christopher Atkeson, The International Conference on Robotics and Automation (ICRA), 2025

This study explores the utility of various internet data sources to select among a set of template robot behaviors to perform skills. Learning contact-rich skills involving tool use from internet data sources has typically been challenging due to the lack of physical information, such as contact existence, location, areas, and force, in this data. Prior works have generally used internet data and foundation models trained on this data to generate low-level robot behavior. We hypothesize that these data and models may be better suited to selecting among a set of basic robot behaviors to perform these contact-rich skills. We explore three methods of template selection: querying large language models, comparing video of robot execution to retrieved human video using features from a pretrained video encoder common in prior work, and performing the same comparison using features from an optical flow encoder trained on internet data. Our results show that LLMs are surprisingly capable template selectors despite their lack of visual information, optical flow encoding significantly outperforms video encoders trained with an order of magnitude more data, and important synergies exist between various forms of internet data for template selection. By exploiting these synergies, we create a template selector using multiple forms of internet data that achieves a 79% success rate on a set of 16 different cooking skills involving tool use.

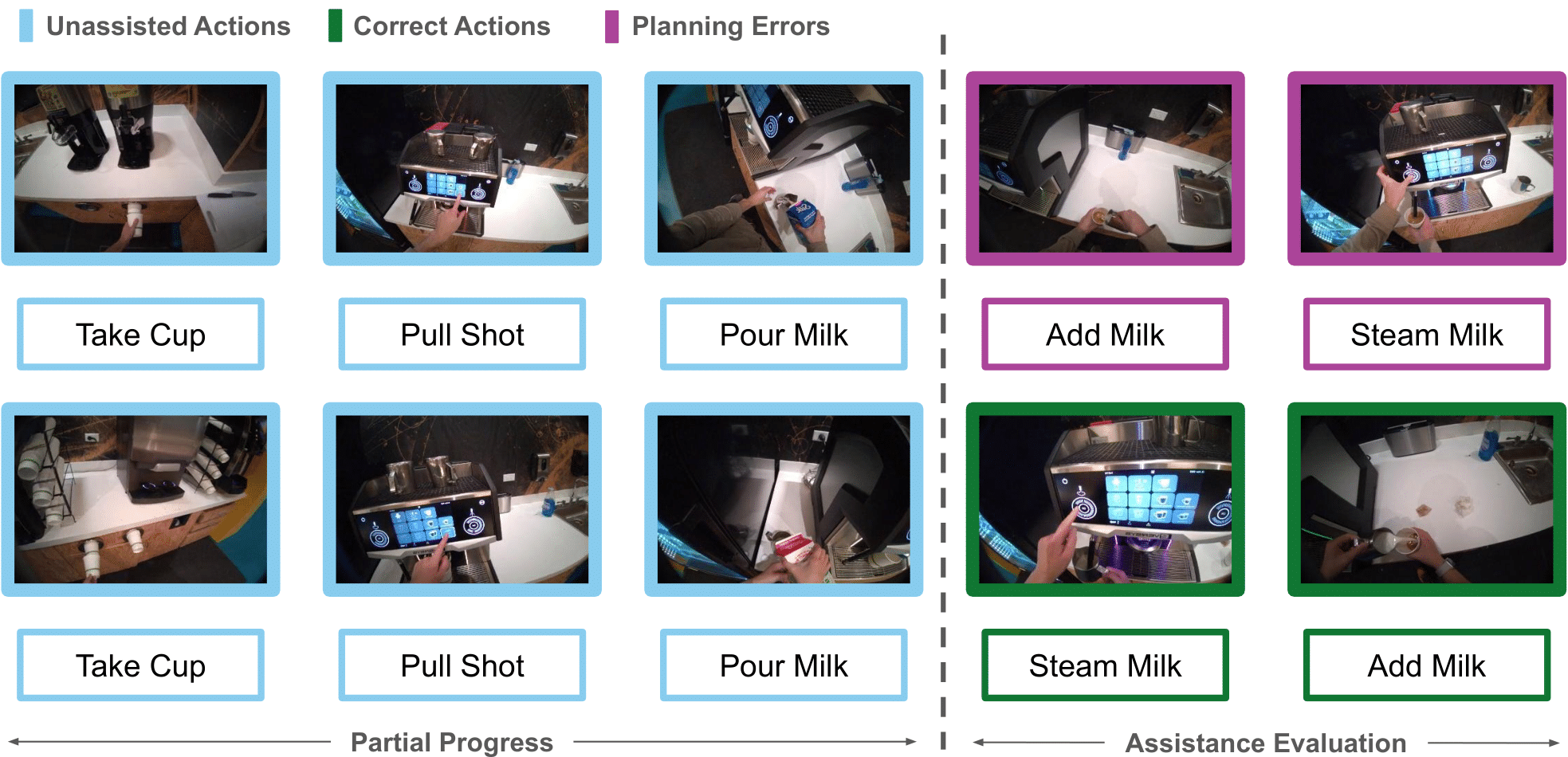

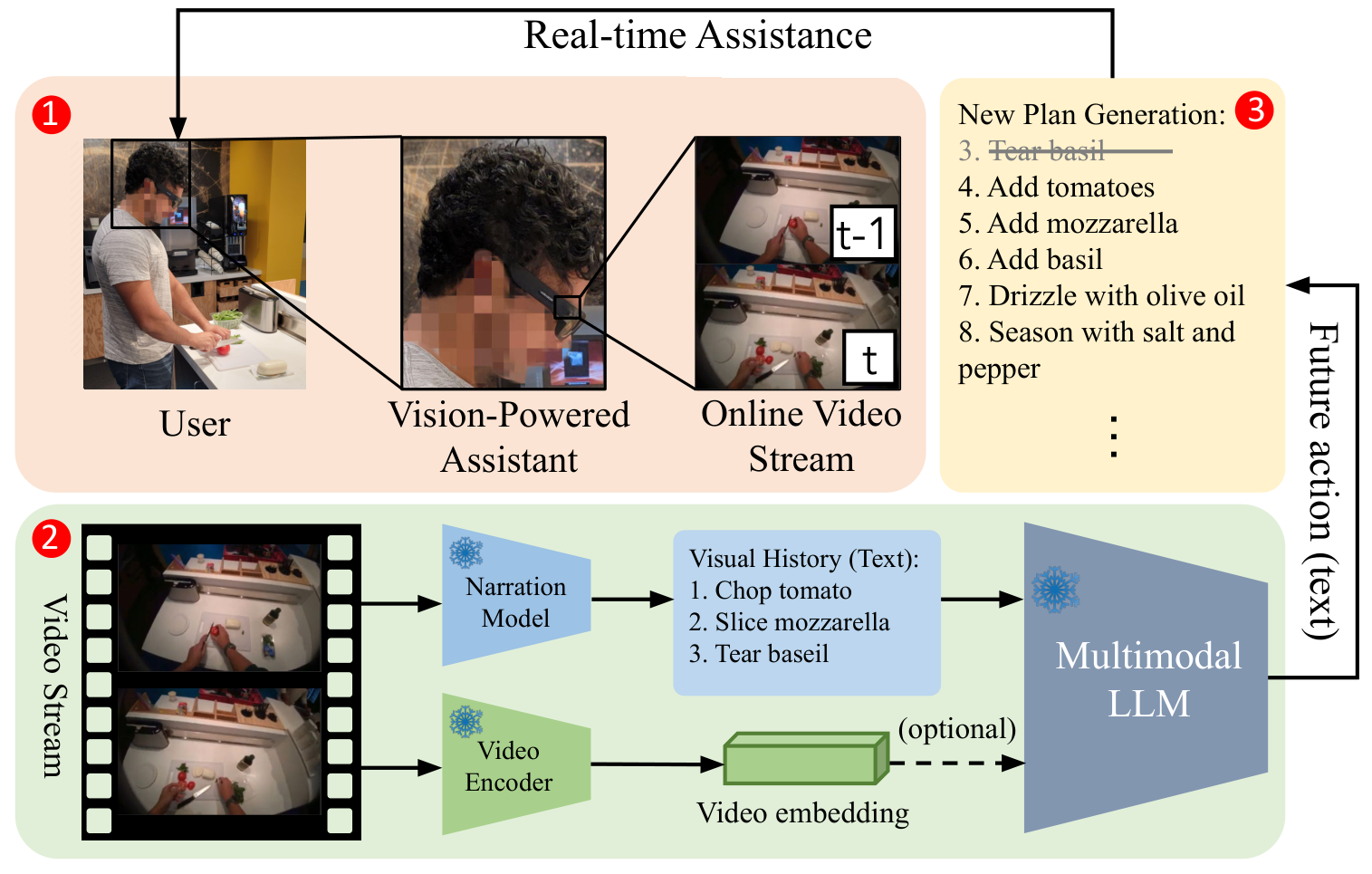

Mrinal Verghese*, Brian Chen*, Hamid Eghbalzadeh, Tushar Nagarajan, and Ruta Desai, IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025 (Oral)

Our research investigates the capability of modern multimodal reasoning models, powered by Large Language Models (LLMs), to facilitate vision-powered assistants for multi-step daily activities. Such assistants must be able to 1) encode relevant visual history from the assistant's sensors, e.g., a camera, 2) forecast future actions for accomplishing the activity, and 3) replan based on the user in the loop. To evaluate the first two capabilities, grounding visual history and forecasting over short and long horizons, we benchmark two prominent classes of multimodal LLM approaches, Socratic Models and Vision Conditioned Language Models (VCLMs), on video-based action anticipation tasks using offline datasets. These offline benchmarks, however, do not allow us to close the loop with the user, which is essential to evaluate the replanning capabilities and measure successful activity completion in assistive scenarios. To that end, we conduct a first-of-its-kind user study, with 18 participants performing 3 different multi-step cooking activities while wearing an egocentric observation device called Aria and following assistance from multimodal LLMs. We find that the Socratic approach outperforms VCLMs in both offline and online settings. We further highlight how grounding long visual history, common in activity assistance, remains challenging in current models, especially for VCLMs, and demonstrate that offline metrics are not indicative of online performance.

Mrinal Verghese and Christopher Atkeson, The International Conference on Robotics and Automation (ICRA), 2023

Tasks with constraints or dependencies between states and actions, such as tasks involving locks or other mechanical blockages, have posed a significant challenge for reinforcement learning algorithms. The sequential nature of these tasks makes obtaining final rewards difficult, and transferring information between task variants using continuous learned values such as weights, rather than discrete symbols, can be inefficient. In this work, we propose a memory-based learning solution that leverages the symbolic nature of the constraints and the temporal ordering of actions in these tasks to quickly acquire and transfer high-level information about the task constraints. We evaluate the performance of memory-based learning on both real and simulated tasks with discontinuous constraints between states and actions, and show that our method learns to solve these tasks an order of magnitude faster than both model-based and model-free deep reinforcement learning methods.

Mrinal Verghese, Nikhil Das, Yuheng Zhi, and Michael Yip, IEEE Robotics and Automation Letters (RA-L), 2022

Real-time robot motion planning in complex high-dimensional environments remains an open problem. Motion planning algorithms, and their underlying collision checkers, are crucial to any robot control stack. Collision checking takes up a large portion of the computational time in robot motion planning. Existing collision checkers make trade-offs between speed and accuracy and scale poorly to high-dimensional, complex environments. We present a novel space decomposition method using K-Means clustering in the Forward Kinematics space to accelerate proxy collision checking. We train individual configuration space models using Fastron, a kernel perceptron algorithm, on these decomposed subspaces, yielding compact yet highly accurate models that can be queried rapidly and scale better to more complex environments. We demonstrate this new method, called Decomposed Fast Perceptron (D-Fastron), on the 7-DOF Baxter robot, producing on average 29x faster collision checks and up to 9.8x faster motion planning compared to state-of-the-art geometric collision checkers.

Mrinal Verghese, Florian Richter, Aaron Gunn, Phil Weissbrod, and Michael Yip, The International Symposium on Robotics Research (ISRR), 2019

We present an orientation adaptive controller to compensate for the effects of highly constrained environments on continuum manipulator actuation. A transformation matrix, updated using optimal estimation techniques from optical flow measurements captured by the distal camera, is composed with any Jacobian estimation or kinematic model to compensate for these effects.

Spring 2023 (Co-Teaching)

This seminar course will cover a mixture of modern and classical methods for robot cognition. We will review papers related to task planning and control using both symbolic and numeric methods. The goal of this course is to give students an overview of the current state of research on robot cognition.

Spring 2023 (Teaching Assistant)

This is a course about how to make robots move through and interact with their environment with speed, efficiency, and robustness. We will survey a broad range of topics, including nonlinear dynamics, linear systems theory, classical optimal control, numerical optimization, state estimation, system identification, and reinforcement learning. The goal is to provide students with hands-on experience applying each of these ideas to a variety of robotic systems so that they can use them in their own research.

Spring 2022 (Teaching Assistant)

This course surveys perception, cognition, and movement in humans, humanoid robots, and humanoid graphical characters. Application areas include more human-like robots, video game characters, and interactive movie characters.

Spring 2020 (Teaching Assistant)

This course will cover the basics of neural networks, as well as recent developments in deep learning, including deep belief nets, convolutional neural networks, recurrent neural networks, long short-term memory, and reinforcement learning. We will study the details of deep learning architectures with a focus on learning end-to-end models for these tasks, particularly image classification.

Winter 2020 (Teaching Assistant)

This course introduces the mathematical formulations and algorithmic implementations of the core supervised machine learning methods. Topics in 118A include regression, nearest neighbors, decision trees, support vector machines, and ensemble classifiers.
